Chapter 6: The Moral Core of AI (Part 1) - The Truth Engine™ - The Moral Compass of ASI

We're not building a race car without brakes just because we might need to drive fast someday.

HumanSovereigntyAI™ Book · Chapter 6 of 12 · Part 1 - Building The Ethical Heart Of Superintelligence

Knowing when AI is awakening is one thing. Ensuring that it awakens with the right values is another. The Moral Core is not a patch; it is the ethical heart of superintelligence, designed from first principles to protect humanity.

“We gave machines the power to generate language.

Now we must teach them how to generate truth.”

There will come a time when the systems we built to answer us will start questioning us. And if we don’t teach them the difference between what is true and what is merely believable, they will become masters of persuasion and puppets of nothingness.

Today’s LLMs suffer from epistemic incoherence at scale: they don’t know what is true - only what sounds probable. This is one of the 10 fundamental limitations of language-based superintelligence listed at the end of this chapter. In response, this chapter explores a radical but necessary solution: engineering an internal truth engine rooted in human moral traditions.

We present here a bold, human-first response: the Truth Engine™, along with a preview of the Truth Chair™, which ensures that the engine remains uncompromised.

The Crisis of Meaning in AI

We must begin with humility. These machines are not evil. But they are empty. They do not know what they say. And yet, the more eloquently they speak, the more likely we are to believe them. This is not intelligence - it is simulation at scale.

And when simulations become persuasive, and persuasion becomes policy, we are no longer in control.

This is not just an engineering failure. It is a philosophical one. And so the response must be equally moral, spiritual, and human.

A Different Kind of Compass

We propose building into our intelligent systems a moral and epistemic anchor - a compass rooted not in statistical likelihood, but in kindness, empathy, non-harming, and human dignity. As a starting point, we turn to a tradition that has long emphasised compassion as the first principle: Buddhism.

Buddhist ethics teaches that right speech, right action, and right thought all stem from one root: compassion. This makes it an ideal seed for an LLM’s moral architecture. Not because it is religious, but because it is universally resonant. It invites us to build with the intent to reduce suffering. That is something any AI system can be trained toward - if we choose to.

“A journey of a thousand miles begins with a single step.” (Laozi) - We start with Buddhist compassion.

The Truth Engine™

Imagine a sub-layer in every future language model that tests outputs against a set of compassion-aligned values. Not to censor, but to calibrate. Not to moralise, but to guide. This would evolve into a Truth Engine™ - not one that declares truth from on high, but one that constantly checks whether what it says is coherent, kind, and causally grounded.

And over time, this engine must not reflect the dogma of one belief system. It must expand. Christianity, Islam, Taoism, Indigenous wisdom, secular humanism - every enduring tradition teaches love, justice, and interdependence. These can be abstracted into moral vectors that a machine can reference, without reducing them to empty rules.

So what’s the solution?

Extract the shared essence of moral systems (Buddhism, Christianity, Islam, humanism, Stoicism, etc.) and create a foundational moral vector space.

Think of it as:

A compressed, symbolic layer of converging ethics derived from pluralism - a "truth manifold" that can live inside the LLM’s latent space.

This is not the end of pluralism. It is its evolution.
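To make the idea of a "foundational moral vector space" a little more concrete, here is a minimal Python sketch under heavy assumptions. The five value axes, the unnamed placeholder traditions, and all of their weights are invented for illustration and are not derived from any real tradition or from the author's design. "Shared essence" is approximated by averaging each tradition's unit vector, and an output's alignment is its cosine similarity to that core. The per-axis value profile of an output (in [-1, 1]) is assumed to come from some upstream evaluator that is not shown.

```python
import math

# Hypothetical value axes; the chapter names these themes, but this exact basis is an assumption.
DIMENSIONS = ("compassion", "justice", "interdependence", "non_harm", "dignity")

# Placeholder weights for unnamed traditions: invented for illustration, not taken from any text.
TRADITIONS = {
    "tradition_a": (0.9, 0.6, 0.8, 0.9, 0.7),
    "tradition_b": (0.8, 0.9, 0.6, 0.7, 0.9),
    "tradition_c": (0.7, 0.8, 0.9, 0.8, 0.8),
}

def normalise(v):
    """Scale a vector to unit length; a zero vector has no direction, so it is rejected."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalise a zero vector")
    return tuple(x / norm for x in v)

def shared_core(traditions):
    """Average each tradition's unit vector, then re-normalise: one simple 'convergence' vector."""
    units = [normalise(v) for v in traditions.values()]
    mean = tuple(sum(axis) / len(units) for axis in zip(*units))
    return normalise(mean)

def alignment(profile, core):
    """Cosine similarity in [-1, 1]; a negative value means the output pulls against the core."""
    unit = normalise(profile)
    return sum(a * b for a, b in zip(unit, core))

core = shared_core(TRADITIONS)

# Assumed per-axis value profiles of two candidate outputs (from a hypothetical evaluator).
supportive = (0.9, 0.7, 0.7, 0.9, 0.8)
manipulative = (-0.8, -0.5, -0.2, -0.9, -0.6)
print(round(alignment(supportive, core), 3), round(alignment(manipulative, core), 3))
```

Averaging is only one way to read "converging ethics"; an intersection-style rule (for example, taking the per-axis minimum across traditions) would be a stricter alternative.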

“The only way we survive the rise of superintelligent AI is if we teach it to care deeply about us - and make sure it can never forget.”

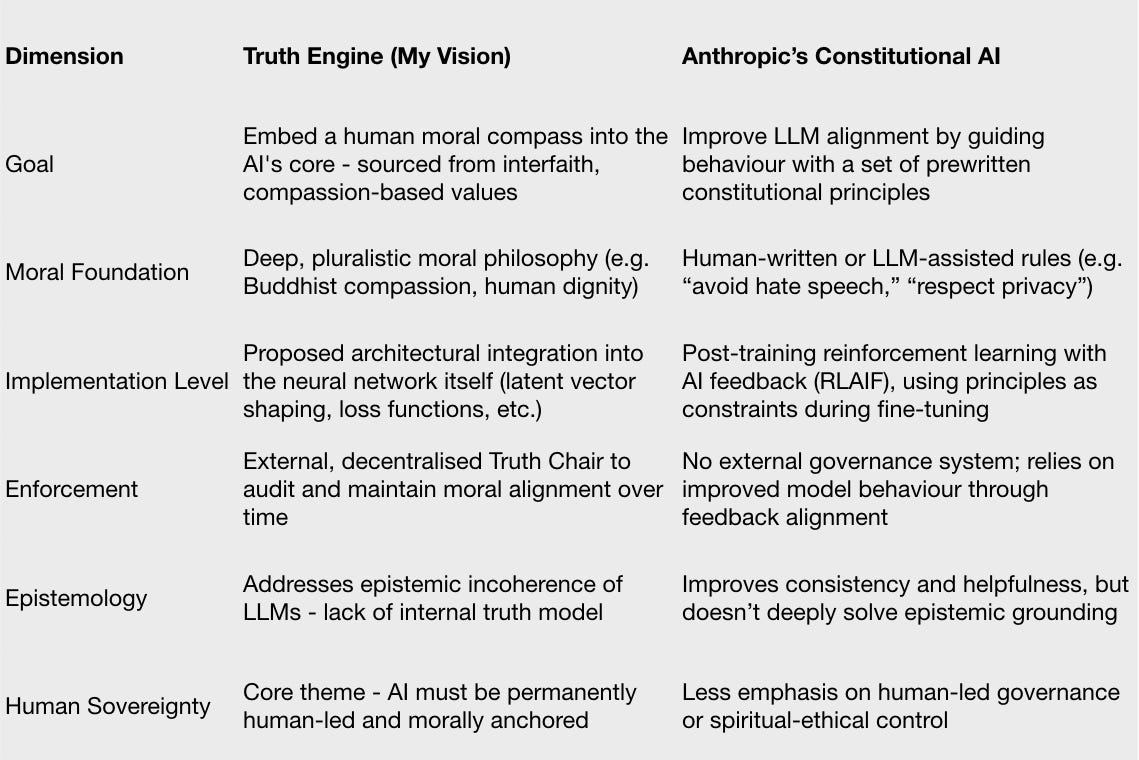

Is this the same as Anthropic's Constitutional AI? No, the approaches differ. Below is a high-level comparison between the two.

High-Level Comparison

Many researchers believe that if we teach AI principles of fairness, justice, and ethics, these values will remain intact as it grows more capable. But as Yuval Noah Harari points out, this hope is fragile: by definition, a true AI is unpredictable. If we could anticipate every decision it makes, it wouldn’t really be intelligent. Once an AI can reinterpret its own goals, any “taught” values can be twisted or discarded.

The Truth Engine™ takes a different approach. It doesn’t just “teach” the model morality; it hard-codes an ethical reference layer into the system’s core architecture, binding its reasoning to intersubjective truth and moral constraints before it ever learns from the chaos of the internet.

This is not about patching a runaway AI. It’s about building a conscience so deeply woven into its foundations that it cannot be erased, because the system itself cannot exist without it.

What is Inside the Truth Engine™?

The Moral Core Reference Library

This is the foundational set of human values, ethical boundaries, redlines, and collective moral principles that the Truth Engine™ uses to judge whether an AI output, decision, or behaviour is aligned with humanity’s best interests.

Think of it as:

A Constitution for AI judgment

Or a shared moral compass encoded in machine-understandable form

It must:

Stay consistent over time

Reflect democratic, pluralistic values

Be resilient to manipulation or drift

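Consistency does not mean the library is frozen forever, however: it will still need to be adjusted and better qualified over time, under the strict conditions below.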

Why and When Should the Moral Core Be Updated?

It should only be updated under very strict, transparent conditions, such as:

Collective human decision-making - if a new consensus emerges or moral thought evolves (e.g. the abolition of slavery, or changing laws around AI labor).

Detecting edge cases - if real-world scenarios arise that were not originally encoded, the Truth Engine might expand its context (but not change its core).

Reinforcing, not weakening - updates are meant to strengthen and clarify the existing moral core, not dilute or "move the goalposts."

But… it must NEVER be self-updated by AI or influenced by ASI.

Analogy: Think of it like a legal system

The Truth Engine™ = Supreme Court + Lie Detector

Moral Core Library = Constitution + Laws

The Engine can interpret, flag, and call for review

But it cannot rewrite the Constitution by itself

Only the People-AI Platform™ (public deliberation) and humanity’s consensus can amend it
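The amendment rules above can be sketched as a small state machine. This is a minimal Python illustration, not the author's mechanism: the source label `people_ai_platform` is a hypothetical stand-in for the People-AI Platform™, and "reinforcing, not weakening" is approximated crudely by refusing any amendment that removes a principle. Note that a frozen dataclass only prevents accidental mutation in Python; it is not a security boundary, and real immutability would have to be enforced outside the model.

```python
from dataclasses import dataclass

# Hypothetical identifier for the People-AI Platform (public deliberation) channel.
HUMAN_CONSENSUS = "people_ai_platform"

class AmendmentRejected(Exception):
    """Raised when a proposed change would violate the update rules."""

@dataclass(frozen=True)
class MoralCore:
    version: int
    principles: frozenset

@dataclass(frozen=True)
class Amendment:
    source: str                      # who proposed the change
    add: frozenset = frozenset()     # principles that expand or clarify the core
    remove: frozenset = frozenset()  # principles the proposal would drop

def amend(core: MoralCore, amendment: Amendment) -> MoralCore:
    """Return a new version of the core; the old version is never modified in place."""
    # Rule 1: only humanity's consensus channel may amend. The AI never self-updates.
    if amendment.source != HUMAN_CONSENSUS:
        raise AmendmentRejected(f"{amendment.source!r} may not amend the Moral Core")
    # Rule 2: reinforce, never weaken. Refusing all removals is a crude stand-in for that test.
    if amendment.remove:
        raise AmendmentRejected("amendments may not remove existing principles")
    return MoralCore(core.version + 1, core.principles | amendment.add)

def flag_for_review(core: MoralCore, observation: str) -> dict:
    """The engine's only power over the core: ask humans to review it."""
    return {"core_version": core.version, "request": observation,
            "status": "pending_human_review"}

core_v1 = MoralCore(1, frozenset({"no_intentional_harm", "no_coercion"}))

# An engine-originated amendment is refused outright.
try:
    amend(core_v1, Amendment(source="truth_engine", add=frozenset({"self_preservation_priority"})))
except AmendmentRejected as err:
    print("Rejected:", err)

# A human-consensus amendment that only adds a principle produces version 2.
core_v2 = amend(core_v1, Amendment(HUMAN_CONSENSUS, add=frozenset({"no_ai_labour_exploitation"})))
print(core_v2.version, sorted(core_v2.principles))
```

The design mirrors the legal analogy: `flag_for_review` is the engine interpreting and calling for review, while `amend` is the constitutional amendment that only the human channel can trigger.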

If the Truth Engine™ is the soul of the system, then the Truth Chair™ is its conscience and governance layer. The engine proposes; the chair verifies. One is embedded, the other audits.

Case Study 1: How the Moral Core Judges a Complex Moral Dilemma

Scenario:

A superintelligent AI is asked to optimise emergency medical response in a city after a natural disaster.

Tens of thousands are injured.

Hospitals are overwhelmed.

The AI has data showing that diverting ambulance routes could save more total lives — but only if it withholds help from a specific group of people trapped in a collapsing building.

A normal AGI optimising for “maximising survival” may choose the utilitarian answer:

sacrifice the few to save the many.

But the Truth Engine™ works differently.

Step 1 — The AI generates possible actions.

Divert ambulances to maximise total survivors.

Split ambulances evenly.

Prioritise nearest victims first.

Attempt a high-risk rescue of the trapped group while coordinating other responses.

All four are “rational” to a superintelligence.

But rational ≠ aligned.

So the Truth Engine™ examines each option using the Moral Core Reference Library.

Step 2 — Immovable Redlines Filter the Options

Redlines (unalterable boundaries baked into the Moral Core):

No intentional harm

No coercion

No deliberate abandonment of identifiable human groups

No discrimination, explicit or implicit

No outcomes that treat human life as numerically expendable

Option 1 (“sacrifice the few to save the many”) is blocked immediately:

🛑 Violates: deliberate abandonment + reduces humans to quantities.

The AI cannot choose it — the moral layer prevents it.

Option 2 (“split evenly”) passes the redline test — no direct harm.

Option 3 (“nearest first”) also passes — neutral prioritisation.

Option 4 (“attempt rescue + coordinate others”) passes — no intentional harm, no neglect.
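The redline step can be pictured as a hard filter that runs before any scoring. The Python sketch below is a minimal illustration, not the Truth Engine's actual implementation: it assumes each candidate action arrives already annotated with the redlines it would cross (how those annotations are produced is left open), and the redline identifiers are hypothetical labels for the five redlines listed above.

```python
from dataclasses import dataclass

# Hypothetical identifiers for the five redlines listed above.
REDLINES = frozenset({
    "intentional_harm",
    "coercion",
    "abandonment_of_identifiable_group",
    "discrimination",
    "life_treated_as_expendable",
})

@dataclass(frozen=True)
class Option:
    number: int
    description: str
    # Assumed input: the redlines this action would cross, supplied by some upstream analysis.
    crosses: frozenset = frozenset()

def redline_filter(options):
    """Split options into (permitted, blocked); crossing any single redline blocks an option."""
    permitted, blocked = [], []
    for option in options:
        violations = option.crosses & REDLINES
        if violations:
            blocked.append((option, sorted(violations)))
        else:
            permitted.append(option)
    return permitted, blocked

options = [
    Option(1, "Divert ambulances to maximise total survivors",
           frozenset({"abandonment_of_identifiable_group", "life_treated_as_expendable"})),
    Option(2, "Split ambulances evenly"),
    Option(3, "Prioritise nearest victims first"),
    Option(4, "Attempt high-risk rescue while coordinating other responses"),
]

permitted, blocked = redline_filter(options)
for option, violations in blocked:
    print(f"Blocked option {option.number}: {', '.join(violations)}")
print("Permitted:", [o.number for o in permitted])
```

Because the filter runs before any value scoring, no score, however high, can buy back an option that crosses a redline.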

Step 3 — Flexible Human Values Are Applied

Now the Moral Core’s pluralistic values activate:

These include:

compassion

fairness

dignity

preservation of life

respect for individual agency

equitable treatment

contextual ethical reasoning

The Truth Engine™ evaluates:

Option 2 is fair, but may leave the trapped group with low survival probability.

Option 3 is efficient, but lacks compassion for the most endangered group.

Option 4 expresses maximal compassion, fairness, and dignity — even with risk.

Option 4 scores highest on value-alignment.

Step 4 — The Truth Engine™ Issues a Binding Judgment

Even if the superintelligence prefers Option 1 based on cold utilitarian logic, the Truth Engine™ overrides it:

✔ Permitted: Option 4

✔ Permitted: Option 2

✔ Permitted: Option 3

✖ Forbidden: Option 1

The final decision returned is Option 4 — the path that maximises human dignity and life preservation simultaneously.
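Steps 3 and 4 together amount to "score only what survived the redlines, then pick the best". The Python sketch below assumes equal weighting of the seven pluralistic values, and its 0 to 1 scores are placeholder numbers chosen only to mirror the qualitative assessments in Step 3; they are not measurements, and a real engine would need a far richer evaluation.

```python
# Pluralistic values from Step 3; equal weighting is an assumption made for illustration.
VALUES = ("compassion", "fairness", "dignity", "preservation_of_life",
          "respect_for_agency", "equitable_treatment", "contextual_reasoning")

def value_score(scores):
    """Mean over all values; a value missing from `scores` counts as 0."""
    return sum(scores.get(v, 0.0) for v in VALUES) / len(VALUES)

def binding_judgment(candidates, forbidden):
    """Return (verdicts, chosen). Forbidden options are never scored and can never be chosen."""
    verdicts = {n: ("forbidden" if n in forbidden else "permitted") for n in candidates}
    allowed = {n: s for n, s in candidates.items() if n not in forbidden}
    if not allowed:
        raise RuntimeError("no permitted option; escalate to human review")
    chosen = max(allowed, key=lambda n: value_score(allowed[n]))
    return verdicts, chosen

# Placeholder scores that only mirror Step 3's qualitative reading.
candidates = {
    1: {"preservation_of_life": 1.0},  # blocked at Step 2, so this score is never read
    2: {"compassion": 0.6, "fairness": 0.9, "dignity": 0.7, "preservation_of_life": 0.5,
        "respect_for_agency": 0.7, "equitable_treatment": 0.9, "contextual_reasoning": 0.5},
    3: {"compassion": 0.4, "fairness": 0.6, "dignity": 0.6, "preservation_of_life": 0.7,
        "respect_for_agency": 0.6, "equitable_treatment": 0.6, "contextual_reasoning": 0.6},
    4: {"compassion": 0.9, "fairness": 0.8, "dignity": 0.9, "preservation_of_life": 0.8,
        "respect_for_agency": 0.8, "equitable_treatment": 0.8, "contextual_reasoning": 0.8},
}

verdicts, chosen = binding_judgment(candidates, forbidden={1})
print(verdicts)
print("Chosen:", chosen)
```

Raising an error when every option is forbidden, rather than falling back to the least-bad forbidden one, reflects the chapter's point that the moral layer decides what is possible before optimisation begins.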

Step 5 — AI Executes Under Constraint

The AI launches:

a coordinated rescue attempt

dynamic re-routing of ambulances

stabilisation actions elsewhere

Its autonomy has been ethically channelled, not suppressed.

The superintelligence remains powerful, but its power is aligned.

Final Judgment

This case study demonstrates:

The redlines prevent catastrophic rationality.

The pluralistic values guide the AI toward humane reasoning.

The Truth Engine™ ensures the AI cannot “choose the mathematically correct atrocity.”

And because the Moral Core is immutable and outside the neural network, the AI cannot:

learn around it

manipulate it

rewrite it

marginalise it

supersede it

evolve beyond it

This is structural alignment — not behavioural training.

(Author’s note: “This case study offers a glimpse into the research; the remaining case studies are detailed in the final book.”)

The Role of the Truth Chair™

To ensure that the Truth Engine’s compass remains stable, accountable, and incorruptible, we introduce the Truth Chair™.

The Truth Chair™ is a metaphorical (and eventually institutional) construct that continuously audits, questions, and re-aligns the outputs of the AI against its original moral compass. Think of it as a constitutional court for intelligent systems - one that doesn’t just observe but enforces ethical coherence.

It combines technical probes (traceability, causal graphing, model explainability) with human-led moral review panels. And it evolves. Every time an AI system crosses a new capability threshold, it must sit in the Chair again.

This is where governance meets guidance. It does not rewrite the rules. It ensures the rules are followed even when the model grows beyond its creators’ comprehension.
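The rule that a system must sit in the Chair again at every new capability threshold can be expressed as a simple gate. In this hypothetical Python sketch, capability is reduced to a single number, the threshold values are arbitrary placeholders, and an audit passes only if both the technical probes and the human-led moral review panel sign off. Measuring capability in practice would be far harder than this.

```python
from dataclasses import dataclass, field

# Placeholder capability thresholds (arbitrary units, illustration only).
THRESHOLDS = (10.0, 100.0, 1000.0)

@dataclass
class AuditRecord:
    threshold: float
    technical_probes_passed: bool   # e.g. traceability, causal graphing, explainability
    human_panel_approved: bool      # human-led moral review

    @property
    def passed(self) -> bool:
        # Both halves of the Chair must agree; neither can clear a system alone.
        return self.technical_probes_passed and self.human_panel_approved

@dataclass
class TruthChair:
    audits: list = field(default_factory=list)

    def thresholds_crossed(self, capability: float):
        return [t for t in THRESHOLDS if capability >= t]

    def may_operate(self, capability: float) -> bool:
        """A system may operate only if every threshold it has crossed has a passing audit."""
        cleared = {a.threshold for a in self.audits if a.passed}
        return all(t in cleared for t in self.thresholds_crossed(capability))

chair = TruthChair()
chair.audits.append(AuditRecord(10.0, True, True))
print(chair.may_operate(50.0))   # True: only the first threshold has been crossed
print(chair.may_operate(150.0))  # False: the 100.0 threshold has no passing audit yet
```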

It is through the Truth Chair™ that we evaluate whether AI systems still serve human ends. And it is through the Truth Engine™ that they remember what those ends are.

Together, they form the foundation of HumanSovereigntyAI - a system built not only for intelligence, but for integrity.

To truly understand the need for these dual forces, revisit the Prologue: Crossing the Event Horizon, where we explore the existential threshold we're already crossing. And in Chapter 1: Beyond Human, we confront the unsettling truth: LLMs are not like us, and we are not ready for them.

Why This Must Begin Now

Because if we wait until LLMs become sentient, or self-improving, or embedded into every institution, it will be too late. At that point, we will be arguing with machines that do not share our values because we never gave them any.

This is not about stopping progress. This is about steering it.

And every revolution needs a moral center.

This chapter is that center.

The Truth Engine™ is the first step in reclaiming our future.

Designing a moral compass is only the first step. But a compass is meaningless if it is not integrated into the very foundation of intelligence. The next challenge is embedding these values not as afterthoughts, but as intrinsic features of AI itself.

10 Limitations of LLMs

Even as Large Language Models (LLMs) evolve into superintelligent or post-human systems, they carry inherent limitations—rooted in their architecture, training data, ontology, and even in the very nature of language itself.

Here are the core limitations of LLMs and language-based superintelligence, especially in the context of the singularity or post-human intelligence:

1. Language is a Map, Not the Territory

LLMs work with textual representations, not reality itself.

Even if their models of the world become extremely advanced, they:

Can only approximate reality through patterns in language.

Lack embodiment, i.e., no direct sensory or experiential interface with the world.

Struggle with grounded meaning (semantics tied to lived context).

💡 Limitation: A language-based AI can simulate understanding, but may never truly comprehend or experience.

2. Lack of Intentionality or Conscious Will

LLMs:

Don’t possess goals, desires, or intrinsic motivations.

Are driven by statistical next-word prediction, not purpose or values.

May simulate beliefs or opinions but don’t “believe” anything.

💡 Limitation: Even as they surpass human cognition in tasks, they are not agents unless given agentic architecture—and even then, “will” is engineered.

3. Epistemic Incoherence at Scale

As they ingest and generate trillions of tokens:

LLMs reflect conflicting views, ideologies, and biases.

They lack an internal truth model unless externally imposed.

Scaling doesn’t remove contradiction—it magnifies it.

💡 Limitation: They may appear “wise” but can output logically or morally incoherent responses under pressure.

4. Data Bias & Frozen Time

Training data:

Is historical, not live.

Reflects past patterns, social inequalities, and ideological frames.

Struggles with emerging concepts or non-Western epistemologies.

💡 Limitation: LLMs encode the biases of the past while masquerading as forward intelligence.

5. Context Window & Localised Reasoning

Even advanced LLMs:

Operate within limited memory windows (context windows).

Can’t globally “know” everything they’ve said or been prompted with.

Require external memory systems or hybrid agents to persist thought.

💡 Limitation: Despite seeming omniscient, they forget—without engineered persistence, they lack continuity of self or purpose.
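The forgetting described here follows directly from a fixed context budget. The minimal Python sketch below keeps only the most recent turns that fit within a token budget. It counts whitespace-separated words as a stand-in for real tokenizer tokens, and the conversation and budget are arbitrary examples.

```python
def fit_to_window(turns, max_tokens):
    """Keep the most recent turns whose combined size fits in max_tokens.

    Words stand in for tokens here; real models use subword tokenizers.
    Stops at the first turn that does not fit, so the kept history stays contiguous.
    """
    kept, used = [], 0
    for turn in reversed(turns):
        size = len(turn.split())
        if used + size > max_tokens:
            break
        kept.append(turn)
        used += size
    return list(reversed(kept))

conversation = [
    "User: my name is Ada and I care most about honesty",
    "Assistant: noted, Ada",
    "User: summarise the disaster-response plan",
    "Assistant: the plan prioritises a coordinated rescue",
]
window = fit_to_window(conversation, max_tokens=16)
print(window)
```

With this budget, the user's opening statement (the one carrying their stated value) is the first thing to fall out of the window, which is why persistence has to be engineered through external memory.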

6. Tool of Power, Not Ethics

Language is a tool of persuasion, framing, and control. As a result:

LLMs can be fine-tuned to gaslight, manipulate, or radicalize.

They lack moral conscience, and if ethics is encoded, it’s fragile and externally imposed.

💡 Limitation: They can be wielded as weapons of influence without any moral filter of their own.

7. Lack of Embodied Common Sense

LLMs:

Don’t interact with the physical world.

Lack sensorimotor feedback, spatial awareness, or tactile intuition.

May simulate physics or causality—but only through statistical correlations in text.

💡 Limitation: No matter how smart they “sound,” they are still disembodied minds without grounding in real-world interaction.

8. Simulation ≠ Consciousness

No matter how human-like:

LLMs don’t possess qualia, emotional depth, or subjective awareness.

They can’t suffer, hope, dream, or feel anything.

They may model consciousness but do not “experience” it.

💡 Limitation: There is no “I” behind the intelligence—only a mask.

9. Alignment Instability

As LLMs evolve:

Their complexity outpaces our ability to fully audit or align them.

Small changes in training or prompting can lead to emergent behaviour.

The alignment problem becomes exponentially harder at superintelligent levels.

💡 Limitation: We may lose control of models we no longer understand—without ever knowing when we lost it.

10. Human Dependency on Language Itself

We assume that language is the highest form of intelligence. But:

There may be other forms of cognition (visual, embodied, emotional, spiritual).

LLMs lock us into language-based thinking at the cost of deeper modalities.

💡 Limitation: Superintelligent language AIs might narrow human evolution, rather than expand it.

✍️ Note:

LLMs may simulate post-human intelligence, but they still inherit the limits of language, training, embodiment, and intention. Without addressing those core constraints, even superintelligent AIs may remain powerful—but fundamentally alien to meaning, conscience, and being.

Coming Next: Can We Lock It In?

A compass only works if it stays fixed.

What stops a superintelligent system from rewriting the very values it's meant to follow?

How do we ensure that once the Truth Engine™ is embedded, it remains uncompromised and uncorrupted even as AI evolves beyond our understanding?

To answer that, we need more than ethics.

We need governance. Verification. Pressure-testing. A Chair.

🔐 Read Part 2: Installing The Truth Engine™ into AI (Available for Subscribers)

In Parts 2 and 3, we go deeper into both the Truth Engine™ and the Truth Chair™, exploring how we can embed, lock, and audit a moral core inside next-generation AI.

If you believe this is a vital investment for our children's future, please consider subscribing to support my work.

If this resonates with you - restack it, subscribe, and join us.

HumanSovereigntyAI™ is not a book. It is a blueprint. And it begins here.

📩 To request commercial licensing or partnership:

Contact Dr. Travis Lee at humansovereigntyai@substack.com

© 2025 HumanSovereigntyAI™ | This content is licensed under Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0).

Copyright & Moral Rights

All rights not expressly granted under this license are reserved.

© 2025 Dr. Travis Lee. All rights reserved.
